Have you ever fundamentally disagreed with someone on a personal issue that both of you were very passionate about? Climate change, reproductive rights, election integrity, gun reform… pick a topic. How did that go? We’ve all been there, on both sides of the debate. On the one hand, our values and convictions are what make us who we are. On the other hand, the hard part of personal growth is acknowledging that we may be wrong about a deeply held belief, integrating new information, and evolving into a more enlightened view of the world.

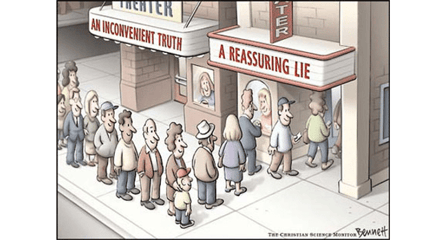

Generally, the most vocal defenders of any position are the least willing to consider information that gives credence or value to a different position. Presenting actual facts and data intended to offer a different point of view or challenge their thinking just makes them dig in their heels and become even more sure of their “rightness.”

We’d like to believe that we are logical beings who, when our beliefs are challenged with facts, incorporate the new information into our thinking. The truth is that when our convictions are challenged by contradictory evidence, our beliefs often get stronger. Once something is added to our cognitive catalog of beliefs, it’s intricately woven into our sense of self.

When we get information that is consistent with our beliefs, our natural tendency is to lean in and add it as reinforcement. (See, I knew it!) Over time, we become increasingly less critical of any information that “proves” we’re correct.

But when the information is dissonant or contrary to our beliefs, we look for any reason to dismiss it. We find it biased, flawed, or not even worthy of consideration. (What a moron!)

When people are forced to look at evidence that conflicts with their mental models, the automatic instinct is to criticize, distort, or dismiss it. In that process, they recall information stored away in their memory banks, experience negative emotions from the “threat” to their identity, and form stronger neural connections. And just like that, their convictions are stronger than before.

Today, information is available on demand and tailored to your preferences, political identity, purchases, music and memes: a nonstop stream of information confirming what you already believe to be true, without ever leaving the safety of your filtered bubble. Online algorithms, cookies and tracked advertising have created ideal conditions for the subconscious psychological forces behind this phenomenon.

Here are three subconscious biases that are likely undermining those tough conversations.

The Backfire Effect

Decades of research show how fervently we protect beliefs when confronted with information that conflicts with them. It’s an instinctive defense mechanism that kicks in when someone presents information (even statistically sound research or data) that disputes our position. Those facts and figures backfire and only strengthen our misconceptions.

A good example of the backfire effect comes from a 2010 study. Researchers asked people to read a fake newspaper article containing a real quotation of George W. Bush, in which the former president asserted, “The tax relief stimulated economic vitality and growth and it has helped increase revenues to the Treasury.”

In some versions of the article, this false claim was then debunked by economic evidence: a correction appended to the end of the article stated that, in fact, the Bush tax cuts “were followed by an unprecedented three-year decline in nominal tax revenues, from $2 trillion in 2000 to $1.8 trillion in 2003.” People on opposite sides of the political spectrum read the same articles and the same corrections. In both groups, when new evidence threatened their existing beliefs, they doubled down. The corrections backfired: the evidence made them more certain that their original beliefs were correct. The effect was most striking among conservatives, who were twice as likely to believe Bush’s claim was true after reading the correction as conservatives who did not read it. Why? Researchers theorize it is because conservatives had a stronger group identity than the other groups. They were subconsciously defending their group by believing the claim.

Here is a more recent example of the backfire effect, captured at the 2022 NRA convention.

I had a good conversation with this guy about hammers. https://t.co/zCjj4mgsue

— Jason Selvig (@jasonselvig) May 31, 2022

Confirmation Bias

A second cousin to the backfire effect is confirmation bias: the unconscious force that nudges us to seek out information that aligns with our existing belief system. Once we have formed a view, we embrace information that confirms that view and dismiss information to the contrary. We pick out the bits of data that confirm our prejudices because it makes us feel good to be “right.”

Sometimes we want to be right so badly that we become prisoners of inaccurate assumptions.

Consider a 2018 study of more than 1,000 South Florida residents, designed to assess how they perceived the vulnerability of their property and their communities to severe storms. Researchers asked about their political affiliation and their support for policies such as zoning laws, gasoline taxes and other measures to address climate change.

Half of the participants received a map of their own city illustrating what could happen just 15 years in the future, at the present rate of sea level rise, if a Category 3 hurricane struck with storm surge flooding. Those who had viewed the maps were far less likely to believe climate change was taking place than those who had not.

Furthermore, those who saw the maps were less likely to believe that climate change increases the severity of storms or that sea level is rising and related to climate change. Strikingly, exposure to the scientific map did not change beliefs that their own homes were susceptible to flooding or that sea level rise would reduce local property values.

Consistent with national surveys, party identification was the strongest predictor of general perceptions of climate change and sea level rise. Among those who were shown the maps, self-identified Republicans had the strongest negative responses. Researchers theorize that this was because they had stronger identity ties to their “tribe” than the other groups.

The Egocentric Bias

Egocentric bias is the tendency to rely too heavily on one’s own perspective and to have a higher opinion of oneself and one’s status than reality warrants. This self-centered perspective gives rise to many other biases, including overestimating how successfully we communicate with others, dismissing different points of view as wrong, and overestimating how much others share our attitudes and preferences.

In the fall of 2008, the RAND Corporation’s American Life Panel asked respondents how likely they were to vote for Barack Obama, John McCain, or a third candidate in the upcoming presidential election. Then they were asked how likely each candidate was to win the election. The more strongly people favored a certain candidate, the higher they estimated that candidate’s likelihood of winning. For instance, those who strongly preferred Barack Obama predicted that he had a 65% chance of becoming president, while those who preferred another candidate estimated Obama had only a 40% chance of victory.

The results held true for both candidates and were replicated in the 2010 state elections. These patterns persisted no matter how the results were stratified: respondents of every age, race, and education level thought that their preferred candidate was more likely to win the election. When people changed their candidate preferences over time, their expectations about the outcome of the election shifted as well.

Although at a theoretical level we are aware that other people can have perspectives different from our own, egocentric bias often leads us to impose our own perspectives on others even when it is unwarranted. In addition, this bias can influence ethical judgements to the point where people believe their views are not only better than others’ but also morally superior, and therefore judge anyone who disagrees as immoral.

Research shows that it takes approximately 250 milliseconds to decide whether something is right or wrong, and we make instantaneous moral evaluations even when we cannot explain them. Moral judgements, like any other judgements, are highly prone to egocentric bias.

A 2020 study investigated whether people perceive actions as morally right when they serve the interests of their group but as morally wrong when they serve outgroup interests. Researchers found that this egocentric bias in moral judgements about ingroup members was especially strong among participants who are defensive about their group identity, a trait known as collective narcissism. In one of the experiments, they asked English and Polish participants to judge the morality of decisions made by ingroup and outgroup members and found that group identity influenced participants’ moral judgements, but only among those high in collective narcissism. In a different experiment, they asked American participants (Democrats and Republicans) to evaluate the morality of the US Senate’s collective decision to confirm Brett Kavanaugh to the Supreme Court. Republican voters overwhelmingly judged the Senate’s decision to be upstanding and moral, while Democrats condemned it.

Unless we intentionally examine a situation from a completely different viewpoint, egocentric bias is often behind the sort of righteous indignation that brings civil conversations to a screeching halt. When egocentric bias is in high gear, one sees his or her perspective as the only correct perspective. Furthermore, those who disagree with that perspective or offer an alternative are seen as not only wrong, but also immoral.

“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” - Mark Twain

Overcoming these biases and evolving toward a more intelligent and enlightened understanding of the world is the cornerstone of intellectual humility: the recognition that the things you believe might be wrong. Accepting information that challenges a deeply held belief takes deliberate intention, and even actual changes in the physiology of the brain.